Stop trying to make Etched happen.

If you raise $120M, at least try to write an honest press release.

This isn’t the first time I’ve written about Etched, the chip startup claiming to “burn transformers into silicon.” Back then, Etched had only raised about $5M in a small seed round, so I wasn’t too harsh; I mostly used their pitch as a jumping-off point to explain why trying to beat Nvidia through specialization is a bad idea. Nvidia is already building highly specialized tensor cores to accelerate matrix multiplication, and they have armies of incredibly talented engineers working hard to optimize every little bit of their silicon. I recently met an engineer with a PhD whose entire job is to reduce the glitching power consumption in Nvidia’s logic circuits. Nvidia has so many engineering resources that competing directly against them is incredibly difficult. I wouldn’t put my money behind any company trying to compete with them head-on.

Apparently a lot of venture capitalists disagree with that assessment, though. Etched just raised $120M to bring their first chips to market. This announcement was accompanied by a blog post explaining how they plan to take down Nvidia. That blog post was, to put it kindly, potentially disingenuous. Let’s walk through why.

The Etched Announcement

On June 25th, Etched announced that they’re “making the biggest bet in AI.” They’re making a chip that can only run transformers. They start off by admitting that, if transformers go away, their silicon is basically garbage. Points for honesty, I guess?

Then, they survey all of the existing AI chips and deem them “too flexible.” Now, Etched isn’t wrong that chips like Groq’s LPU and SambaNova’s SN40L are flexible. But their evidence that this flexibility is a real cost is tenuous at best.

No, AI is not like Bitcoin mining.

Etched motivates their transformer ASIC by looking at the history of Bitcoin mining, which moved from CPUs to GPUs and finally to dedicated ASICs built only for Bitcoin mining. And this isn’t the first time Etched has alluded to Bitcoin miners as an example of why single-purpose ASICs are great. But it’s not a fair comparison: Bitcoin mining is a much more specialized workload than transformers are, and GPU makers never tried to compete in the mining market.

The SHA-256 hash function (a member of the SHA-2 family), the heart of Bitcoin mining, is built entirely from highly specialized operations: addition of 32-bit integers modulo 2^32, bit shifts and rotations, and bitwise operations like XOR and AND. General-purpose CPUs and GPUs run those operations far less efficiently than dedicated logic does; it’s why dedicated cryptographic hardware has existed since the 1970s. And Nvidia actively wanted to avoid the mining market; as a matter of fact, they implemented anti-mining features on GPUs. This made it super easy for ASIC miners like Bitmain to take the mining market by storm.
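To make the contrast concrete, here is a minimal from-scratch SHA-256 in Python, written for readability rather than speed and checked against the standard `hashlib` module. Every step inside the compression loop is a 32-bit addition modulo 2^32, a shift or rotation, or a bitwise XOR/AND/NOT; there is no floating point and no multiplication. The `bitcoin_pow_hash` helper is a hypothetical name of ours; it reflects the fact that Bitcoin's proof of work applies SHA-256 twice to the block header.

```python
import hashlib
import math
import struct

MASK = 0xFFFFFFFF  # SHA-256 works on 32-bit words; additions wrap modulo 2**32


def rotr(x: int, n: int) -> int:
    # 32-bit rotate right, built from two shifts and an OR
    return ((x >> n) | (x << (32 - n))) & MASK


def first_primes(count: int) -> list[int]:
    primes, n = [], 2
    while len(primes) < count:
        if all(n % p for p in primes):
            primes.append(n)
        n += 1
    return primes


def icbrt(n: int) -> int:
    # largest r with r**3 <= n, by binary search (exact integer arithmetic)
    lo, hi = 0, 1 << (n.bit_length() // 3 + 2)
    while lo < hi:
        mid = (lo + hi + 1) // 2
        if mid ** 3 <= n:
            lo = mid
        else:
            hi = mid - 1
    return lo


# Spec constants: first 32 fractional bits of square / cube roots of the first primes
H0 = [math.isqrt(p << 64) & MASK for p in first_primes(8)]
K = [icbrt(p << 96) & MASK for p in first_primes(64)]


def sha256(message: bytes) -> bytes:
    # pad: 0x80, zeros, then the 64-bit big-endian bit length, to a multiple of 64 bytes
    length_bits = len(message) * 8
    message += b"\x80"
    message += b"\x00" * ((56 - len(message)) % 64)
    message += struct.pack(">Q", length_bits)

    h = list(H0)
    for start in range(0, len(message), 64):
        w = list(struct.unpack(">16I", message[start:start + 64]))
        for t in range(16, 64):  # message schedule: shifts, rotations, XOR, add
            s0 = rotr(w[t - 15], 7) ^ rotr(w[t - 15], 18) ^ (w[t - 15] >> 3)
            s1 = rotr(w[t - 2], 17) ^ rotr(w[t - 2], 19) ^ (w[t - 2] >> 10)
            w.append((w[t - 16] + s0 + w[t - 7] + s1) & MASK)

        a, b, c, d, e, f, g, hh = h
        for t in range(64):  # 64 rounds of pure bit manipulation and modular addition
            big_s1 = rotr(e, 6) ^ rotr(e, 11) ^ rotr(e, 25)
            ch = (e & f) ^ (~e & g)
            temp1 = (hh + big_s1 + ch + K[t] + w[t]) & MASK
            big_s0 = rotr(a, 2) ^ rotr(a, 13) ^ rotr(a, 22)
            maj = (a & b) ^ (a & c) ^ (b & c)
            temp2 = (big_s0 + maj) & MASK
            hh, g, f, e, d, c, b, a = g, f, e, (d + temp1) & MASK, c, b, a, (temp1 + temp2) & MASK

        h = [(x + y) & MASK for x, y in zip(h, [a, b, c, d, e, f, g, hh])]
    return b"".join(struct.pack(">I", x) for x in h)


def bitcoin_pow_hash(header: bytes) -> bytes:
    # Bitcoin's proof of work hashes the block header twice with SHA-256
    return sha256(sha256(header))


assert sha256(b"abc") == hashlib.sha256(b"abc").digest()
```

A mining ASIC hardwires exactly this loop and nothing else, which is why it can beat any programmable chip at it.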

On the other hand, AI models rely on floating-point matrix math, an operation which is much more general -- and an operation that GPUs are already extremely good at. And GPU makers like Nvidia are aware of this and are doubling down: they’re already optimizing their hardware for transformers. This means that ASICs have much tougher competition.

Also, AI models need more flexible hardware even if they’re only running transformers. Different transformer models may have different dimensions, which requires reprogrammability. It’s not like Bitcoin miners, which need to run a single, fixed operation as fast and efficiently as humanly possible. Just because GPUs and other programmable accelerators are inefficient for mining Bitcoin doesn’t mean they’re inefficient for AI.
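As a rough illustration, the sketch below lists the weight-matrix shapes of one standard transformer layer for three published configurations (GPT-2 small, GPT-2 XL, and GPT-3 175B). The layer is simplified: biases, layer norms, and the sequence-length-dependent attention products are left out, and the `TransformerDims` class is a hypothetical helper. Even within one model family, every matrix changes shape, so a transformer-only chip still has to handle many sizes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TransformerDims:
    name: str
    d_model: int  # width of the residual stream
    n_heads: int  # number of attention heads
    d_ff: int     # hidden width of the MLP

    @property
    def head_dim(self) -> int:
        return self.d_model // self.n_heads

    def weight_shapes(self) -> dict[str, tuple[int, int]]:
        # (input dim, output dim) of each weight matrix in one simplified layer
        d, f = self.d_model, self.d_ff
        return {
            "W_q": (d, d), "W_k": (d, d), "W_v": (d, d), "W_o": (d, d),
            "W_up": (d, f), "W_down": (f, d),
        }

    def matmul_flops_per_token(self) -> int:
        # multiplying a 1 x m vector by an m x n matrix costs about 2*m*n FLOPs
        return sum(2 * m * n for m, n in self.weight_shapes().values())


CONFIGS = [
    TransformerDims("GPT-2 small", d_model=768, n_heads=12, d_ff=3072),
    TransformerDims("GPT-2 XL", d_model=1600, n_heads=25, d_ff=6400),
    TransformerDims("GPT-3 175B", d_model=12288, n_heads=96, d_ff=49152),
]

for cfg in CONFIGS:
    print(cfg.name, cfg.head_dim, cfg.weight_shapes()["W_up"], cfg.matmul_flops_per_token())
```

The head dimension, the square attention projections, and the rectangular MLP matrices all differ from model to model, which is the kind of variation a fixed-function datapath would have to be parameterized for.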

No, control logic is not a huge overhead.

Etched makes the claim that only 3.3% of the transistors on an Nvidia H100 are used for matrix multiplication. Not only is this false, but it’s also misleading. Etched is trying to imply that most of the remaining 96.7% of the chip is devoted to control logic that they can eliminate. In reality, most of that 96.7% is devoted to features that Etched also needs, and to other compute elements that help run transformers on the H100.

Sure, only 3.3% of the transistors on an H100 die are part of the core logic path of the tensor cores. But each tensor core will also feature “pipeline registers,” which hold data that’s headed into the core and data that’s coming out of the core. Etched also needs to include pipeline registers in their design to actually get data into and out of their cores.
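A toy, cycle-level model of a three-stage multiply-accumulate pipeline shows why those registers exist: each stage hands its result to the next through a register that latches on every clock edge. This is a minimal illustration of pipelining in general, under our own assumptions; it is not a description of how Nvidia's tensor cores or Etched's cores are actually built, and `pipelined_dot` is a hypothetical name.

```python
def pipelined_dot(a: list[float], b: list[float]) -> tuple[float, int]:
    """Toy cycle-level model of a 3-stage multiply-accumulate pipeline.

    Stage 1 (load):       latch a[i], b[i] into the input register.
    Stage 2 (multiply):   latch the product into the product register.
    Stage 3 (accumulate): add the latched product to the accumulator.
    Returns (dot product, clock cycles used).
    """
    input_reg = None    # pipeline register between load and multiply
    product_reg = None  # pipeline register between multiply and accumulate
    acc, cycles, i = 0, 0, 0
    while i < len(a) or input_reg is not None or product_reg is not None:
        # every register updates on the same clock edge, so each stage
        # reads the values latched on the previous cycle
        if product_reg is not None:
            acc += product_reg
        next_product = input_reg[0] * input_reg[1] if input_reg is not None else None
        if i < len(a):
            next_input = (a[i], b[i])
            i += 1
        else:
            next_input = None
        input_reg, product_reg = next_input, next_product
        cycles += 1
    return acc, cycles


result, cycles = pipelined_dot([1, 2, 3], [4, 5, 6])
print(result, cycles)  # 32 5
```

Once the pipeline fills, one new multiply-accumulate completes every cycle (n inputs take n + 2 cycles here), but only because the two registers keep every stage busy. Any design that wants that throughput, specialized or not, pays for those registers.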

Also, the H100 is more than just tensor cores. Sure, it has a few hundred tensor cores, which are amazing at matrix multiplication. But it also has 14,592 CUDA cores (in its PCIe form factor), which are also very effective at matrix multiplication, as well as at many other operations that transformers need, like the softmax in dot-product attention. The H100 has many, many computational resources outside the tensor cores that can be devoted to running transformer models.
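Scaled dot-product attention mixes both kinds of work. In the minimal pure-Python sketch below, the two `matmul` calls are the matrix products that tensor cores are built to accelerate, while the softmax in between is elementwise max, exponent, sum, and divide work that falls to more general-purpose cores. The mapping to hardware units is a simplification, and the helper names are our own.

```python
import math


def matmul(x: list[list[float]], y: list[list[float]]) -> list[list[float]]:
    # plain matrix product: the part tensor cores are built to accelerate
    return [[sum(p * q for p, q in zip(row, col)) for col in zip(*y)] for row in x]


def softmax_rows(scores: list[list[float]]) -> list[list[float]]:
    # elementwise max / exp / sum / divide: not a matrix multiplication
    out = []
    for row in scores:
        m = max(row)  # subtract the row max for numerical stability
        exps = [math.exp(s - m) for s in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def attention(q, k, v):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(q[0])
    k_t = [list(col) for col in zip(*k)]  # transpose K
    scores = matmul(q, k_t)               # matmul #1
    scaled = [[s / math.sqrt(d) for s in row] for row in scores]
    weights = softmax_rows(scaled)        # non-matmul work
    return matmul(weights, v)             # matmul #2


# two identical keys get equal weight, so the output averages the two values
print(attention([[1.0, 0.0]], [[1.0, 0.0], [1.0, 0.0]], [[2.0, 4.0], [6.0, 8.0]]))  # [[4.0, 6.0]]
```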

What about transistors that aren’t devoted to tensor cores, CUDA cores, or registers to get data in and out of those cores? Well, a lot of them are devoted to memory.

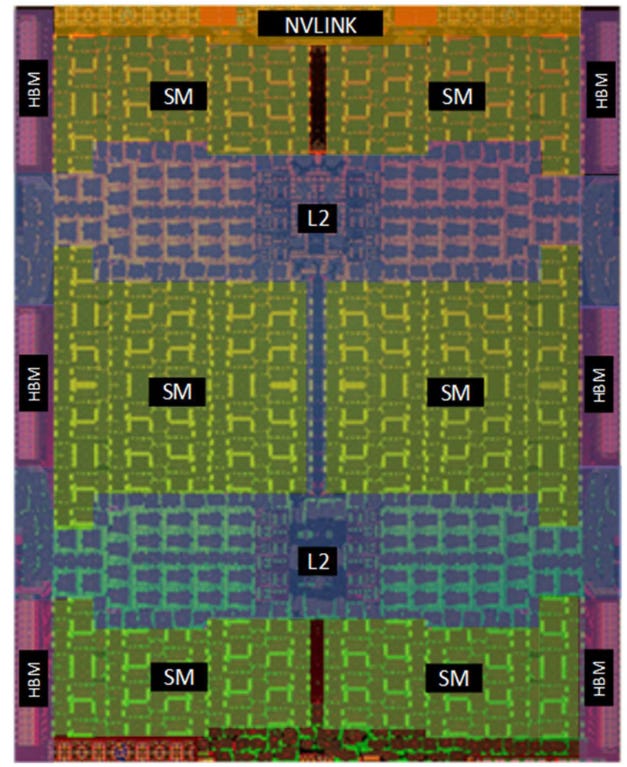

If you look at the floorplan of an A100, Nvidia’s previous-generation chip, a huge portion of the die is dedicated to memory! Everything highlighted in blue above is the L2 cache, the largest memory banks on the chip. There are also smaller memory units distributed throughout the streaming multiprocessors (SMs). This memory hierarchy is important because it keeps each core adequately supplied with data to process, rather than sitting idle while it waits for data to show up. Etched’s chips will face the same challenges, so they’ll need the same memory.
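Why does on-chip memory matter so much? The sketch below, a minimal example under simplified assumptions, multiplies two square matrices tile by tile and counts element fetches from off-chip memory, assuming a hypothetical on-chip buffer that holds one tile of each input (output writes are not counted). With tile size T, each fetched element is reused T times, so fetches fall from 2n^3 with no reuse to 2n^3/T. Real GPUs spread this across registers, shared memory, and L2 cache rather than a single buffer.

```python
def tiled_matmul(a: list[list[float]], b: list[list[float]], tile: int):
    """Multiply n x n matrices a and b tile by tile; return (product, off-chip loads).

    Assumes a hypothetical on-chip buffer holding one tile of a and one tile of b.
    """
    n = len(a)
    if n % tile != 0:
        raise ValueError("matrix size must be a multiple of the tile size")
    c = [[0] * n for _ in range(n)]
    loads = 0
    for i0 in range(0, n, tile):
        for j0 in range(0, n, tile):
            for k0 in range(0, n, tile):
                # fetch one tile of each input from off-chip memory into the buffer
                a_tile = [row[k0:k0 + tile] for row in a[i0:i0 + tile]]
                b_tile = [row[j0:j0 + tile] for row in b[k0:k0 + tile]]
                loads += 2 * tile * tile
                # each buffered element is now reused `tile` times without refetching
                for i in range(tile):
                    for j in range(tile):
                        c[i0 + i][j0 + j] += sum(a_tile[i][k] * b_tile[k][j] for k in range(tile))
    return c, loads


n = 8
a = [[float(i + j) for j in range(n)] for i in range(n)]
b = [[float(i - j) for j in range(n)] for i in range(n)]
for tile in (1, 2, 4, 8):
    _, loads = tiled_matmul(a, b, tile)
    print(tile, loads)  # 2 * n**3 / tile: 1024, 512, 256, 128
```

The bigger the buffer, the fewer trips to slow memory per multiply, which is exactly what a large L2 cache buys.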

Ultimately, most of the transistors on a GPU are not part of the control flow logic. There are multiple core types, as well as registers and memories, that take up a lot more space than the core logic path of the tensor cores. All of those components play a role in making a GPU actually run transformers -- and Etched will need those components too.

Speaking of memory, transformer inference is usually bottlenecked by the hardware’s ability to keep its processing cores supplied with data, not by its ability to actually process that data. Etched claims that they will avoid this “memory bottleneck” by processing data in batches. But that may not be the best idea.

Larger batch sizes increase latency.

One of the magical things about using GPT-4o, OpenAI’s newest model, is how fast it is. You ask it a question, and in a second or so it gives you an answer. And once you’ve used a fast model, it’s very hard to go back to a slower one. That’s why latency is one of the most important metrics for getting AI applications practically adopted.

When performing LLM inference, each input needs to be multiplied by a large number of weight matrices to generate an output. If you want to minimize latency (the time it takes from one input to its corresponding output), you have to perform that entire calculation for each input as soon as that input arrives. But the weight matrices are too large to fit in the on-chip memory, which introduces a memory bottleneck -- the limiting performance factor is the ability to load all of the new weight matrices onto the chip.
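To put a number on that bottleneck: at batch size 1, every weight streams in from off-chip memory for each generated token, so total weight bytes divided by memory bandwidth gives a floor on per-token time. The figures below are illustrative assumptions (a 70B-parameter model stored in 16-bit weights, and 3.35 TB/s of bandwidth, roughly an H100 SXM's advertised HBM3 figure); KV-cache traffic and compute time are ignored, and the function name is hypothetical.

```python
def memory_bound_time_per_token(n_params: float, bytes_per_param: float,
                                bandwidth_bytes_per_s: float) -> float:
    """Floor on per-token decode time at batch size 1.

    Every weight streams from off-chip memory once per generated token, so a step
    can't finish faster than (total weight bytes) / (memory bandwidth).
    KV-cache reads and compute time are ignored.
    """
    return n_params * bytes_per_param / bandwidth_bytes_per_s


# Illustrative assumptions: 70B parameters, 2-byte weights, 3.35 TB/s of bandwidth
t = memory_bound_time_per_token(70e9, 2, 3.35e12)
print(f"{t * 1e3:.1f} ms per token, at most {1 / t:.0f} tokens/s")
# 41.8 ms per token, at most 24 tokens/s
```

No amount of extra arithmetic hardware changes this number; only more bandwidth, smaller weights, or reuse of each loaded weight across several inputs does.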

Batching data, like Etched does, means taking multiple inputs and processing them at the same time. That means that for every weight matrix you load onto the chip, you can process multiple inputs with that weight matrix before you have to go fetch the next weight matrix. This increases your throughput (the number of inputs you can process per second), but makes your latency slower. That means that when a user asks the model to answer a question, it would take longer to start generating an answer.
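The trade-off can be sketched with a toy model under loudly simplified assumptions: static batching, requests arriving at a steady rate, weights loaded once per step regardless of batch size, and roughly 2 FLOPs per parameter per token. All numbers and the `ToyDecodeModel` class are hypothetical; real serving systems use smarter schemes such as continuous batching, so treat this as a direction-of-effect illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToyDecodeModel:
    """Roofline-style toy model of batched decoding; every field is an assumption."""
    weight_bytes: float     # bytes of weights streamed from memory per step
    bandwidth: float        # off-chip memory bandwidth, bytes/s
    flops_per_token: float  # compute per token per step (about 2 x parameters)
    peak_flops: float       # sustained compute, FLOP/s
    arrival_rate: float     # new requests per second (static batching)

    def step_time(self, batch: int) -> float:
        # weights load once per step regardless of batch; compute grows with batch
        memory_time = self.weight_bytes / self.bandwidth
        compute_time = batch * self.flops_per_token / self.peak_flops
        return max(memory_time, compute_time)

    def throughput(self, batch: int) -> float:
        # tokens produced per second across the whole batch
        return batch / self.step_time(batch)

    def first_token_latency(self, batch: int) -> float:
        # the earliest request waits for (batch - 1) more arrivals, then one step
        return (batch - 1) / self.arrival_rate + self.step_time(batch)


model = ToyDecodeModel(weight_bytes=140e9, bandwidth=3.35e12,
                       flops_per_token=140e9, peak_flops=1e15, arrival_rate=100.0)
for batch in (1, 16, 256, 1024):
    print(f"batch {batch:5d}: {model.throughput(batch):8.0f} tokens/s, "
          f"first token after {model.first_token_latency(batch):.2f} s")
```

Throughput climbs with batch size because each weight load is shared by more inputs, while the wait before the first token grows, first from collecting the batch and then, once the step becomes compute-bound, from the step itself.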

By focusing on large batch sizes, Etched is trading latency for throughput, which may limit the commercial adoption of products using their silicon.

Takeaways

Etched is right that they’re making a big bet. And with this announcement, they’ve made a big splash. Claiming 20x greater performance is sure to grab people’s attention. And honestly, Etched may actually be able to offer some performance improvement over Nvidia on some applications. I don’t think it’ll be close to 20x, but I think it’s possible that they achieve a 1-5x performance-per-watt improvement over Nvidia.

But to actually get customers to switch from Nvidia hardware, you need to offer a performance benefit compelling enough that they’ll replace their existing, expensive infrastructure with your new hardware, and you need enough software support to make that transition seamless. Etched barely discusses their software at all, beyond the claim that it will be simple because they only support transformers.

Ultimately, I have significant doubts that Etched will be able to offer sufficient performance or software support to actually win over customers who are currently using Nvidia silicon. And this announcement only made me more skeptical. Etched’s announcement features a lot of disingenuous claims about how specialization helps them, which doesn’t inspire much confidence. It also lacks the content that would convince experts: actual information about how the chips work and what performance they can really achieve.

Whether or not their silicon works, this isn’t the way they should announce it. Instead of making questionable claims about GPU transistor utilization, they should publish an architecture guide and make it clear from an engineering perspective why their architecture works well. Until then, stop trying to make Etched happen.