Most AI chips aren’t cost-effective.

Are d-Matrix’s performance numbers too good to be true?

On November 19th, d-Matrix announced that Corsair, their first commercial chip, is shipping to early customers. They also announced some impressive performance numbers; for Llama2 7B, they showed 20x faster time per output token compared to Nvidia’s H100. At first glance, it may look like we have a GPU-killer on our hands. But, as with most AI chip companies, the public performance numbers are only part of the story. d-Matrix has impressive technology and impressive performance, and is probably the right choice for some customers, but it certainly doesn’t replace GPUs for many use cases.

d-Matrix is building chips based on a digital processing-in-memory (PIM) architecture. Compared to companies like Groq, MatX, and SambaNova, this makes d-Matrix’s chips much more technologically novel and interesting. Obviously, novel does not mean better, but I am certainly excited to see a startup successfully commercializing a new kind of chip. I also love seeing processing-in-memory technology be successful; earlier this year, I sold the PIM-for-cryptography company I founded back in 2021.

Unfortunately, if you read d-Matrix’s whitepaper, some cracks emerge in the exciting narrative. Even though d-Matrix’s chips are exciting and novel, they may not be cost-effective for most customers. Specifically, their impressive benchmarks all rely on the chips operating in “performance mode”, which may not happen in practical infrastructure deployments.

“Performance Mode”

All of d-Matrix’s most impressive performance numbers note that the chip is running in “performance mode”. This is where d-Matrix claims to shine; according to their own whitepaper, Corsair’s performance falls off precipitously as it goes from performance mode to “capacity mode”.

Carefully reading the whitepaper reveals that performance mode refers to the regime where the entire model can be stored in on-chip SRAM. Those of you who remember my article on Groq probably know where this is going. If you want to fit a large model entirely in on-chip SRAM, you’re going to need a lot of chips, which makes your infrastructure much more expensive.

In capacity mode, d-Matrix stores extra model weights in off-chip DRAM. They don’t have any HBM in their systems, which means that once a model is too large to fit in SRAM, they take a significant performance hit. This is probably why they’re not widely sharing any capacity mode performance numbers; I’d expect them to be lower than what Nvidia can achieve with their HBM-equipped H100 GPUs.
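To get a feel for why the memory tier matters so much, here is a rough back-of-the-envelope sketch. It assumes single-stream decoding is bandwidth-bound, so every weight is read once per generated token. The function name and all three bandwidth figures are placeholders I picked to show the trend; they are not d-Matrix or Nvidia specifications.

```python
def time_per_token_ms(model_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Lower bound on decode latency if every weight is read once per token."""
    return model_bytes / bandwidth_bytes_per_s * 1e3


# Assumption: a 7B-parameter model stored at roughly one byte per weight.
MODEL_BYTES = 7e9

# Placeholder bandwidths (bytes/s), chosen only to illustrate the ordering
# of the tiers. They are NOT vendor specifications.
TIERS = {
    "on-chip SRAM": 100e12,
    "HBM": 3e12,
    "off-chip DRAM (no HBM)": 0.4e12,
}

latencies = {name: time_per_token_ms(MODEL_BYTES, bw) for name, bw in TIERS.items()}

for name, ms in latencies.items():
    print(f"{name:>24}: {ms:8.3f} ms/token")
```

Whatever the real numbers are, the shape of the result is the same: the slower the tier that holds the weights, the longer each token takes, and a chip that falls back from SRAM straight to plain DRAM skips the middle tier entirely.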

This means that building high-performance d-Matrix systems is going to be fairly expensive. If an API provider wants to serve many different fine-tuned models, or wants to serve extremely large models like Llama 405B, they need to purchase many racks of d-Matrix systems to get acceptable performance.

Luckily, d-Matrix seems very aware of this limitation and is clearly doing their best to minimize the impact of their all-SRAM strategy on their system’s cost effectiveness. Even in their so-called performance mode, they can deploy Llama 70B entirely in SRAM in a single rack. For context, running Llama 70B on Groq hardware takes 8 racks.

How’d they do it? By leveraging new number formats and extremely dense compute.

Block Floating Point Inference

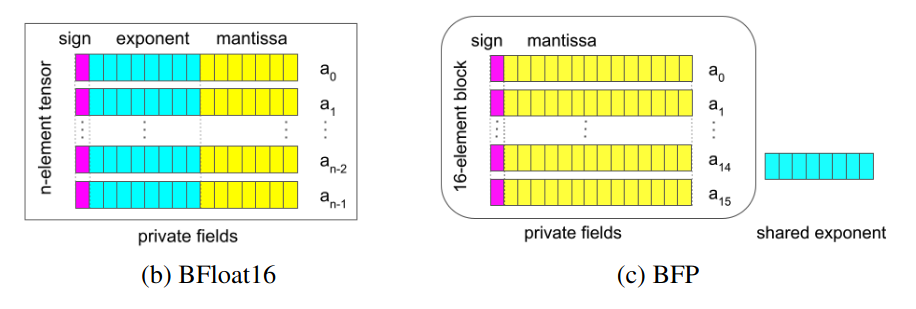

In 2023, an industry consortium including Microsoft, Nvidia, and Meta proposed a suite of new data formats called the Microscaling Data Formats. These new formats included the so-called block floating point formats, like MXINT8 and MXINT16.[1] In these formats, a block of integer values shares a single exponent.

These new data formats are only supported by a handful of chips, including some of AMD’s edge AI NPUs, Tenstorrent’s Grayskull and Wormhole chips, and, most notably, d-Matrix’s Jayhawk and Corsair chips. MXINT8 clearly reduces the memory footprint of machine learning models; by replacing many different exponents with a single shared exponent, you can cleanly eliminate a lot of bits that need to be stored on-chip. This is one of the reasons why d-Matrix requires fewer chips than Groq does to deploy entire models in on-chip SRAM.
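A small sketch can make the shared-exponent idea and the storage savings concrete. This is a simplified illustration, not the exact bit layout of the Microscaling specification or of d-Matrix's hardware: the block size of 32, the 8-bit shared exponent, and the helper names `quantize_block`, `dequantize_block`, and `bits_per_element` are all assumptions for the example.

```python
import math

BLOCK_SIZE = 32     # assumed number of elements sharing one exponent
MANTISSA_BITS = 8   # signed integer stored per element
EXPONENT_BITS = 8   # assumed width of the single shared exponent


def quantize_block(values):
    """Return (shared_exp, mantissas) such that value ~= mantissa * 2**shared_exp."""
    max_abs = max(abs(v) for v in values)
    if max_abs == 0.0:
        return 0, [0] * len(values)
    qmax = 2 ** (MANTISSA_BITS - 1) - 1  # 127 for 8-bit mantissas
    # Smallest power-of-two scale that lets the largest value fit in 8 bits.
    shared_exp = math.ceil(math.log2(max_abs / qmax))
    scale = 2.0 ** shared_exp
    # Clamp to guard against rounding at the edge of the range.
    mantissas = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return shared_exp, mantissas


def dequantize_block(shared_exp, mantissas):
    """Rebuild approximate real values from the block representation."""
    return [m * 2.0 ** shared_exp for m in mantissas]


def bits_per_element(block_size=BLOCK_SIZE):
    """Average storage cost: one mantissa each, plus a share of the exponent."""
    return MANTISSA_BITS + EXPONENT_BITS / block_size


shared_exp, mantissas = quantize_block([0.5, -1.0, 0.25, 0.75])
print(shared_exp, mantissas)   # exponent -6, mantissas [32, -64, 16, 48]
print(bits_per_element())      # 8.25 bits per element under these assumptions
```

Under these assumptions each weight costs 8.25 bits instead of the 16 bits of a conventional half-precision float, which is where the footprint reduction comes from.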

But block floating point is really important to d-Matrix for another reason, too. Conventional floating point math is fairly difficult for processing-in-memory chips. To add floating point numbers, you need to convert them so they have the same exponent. This involves bit-shifting the mantissa, which is difficult to do in parallel inside of a memory array, because each mantissa may need to be shifted by a different amount.

With MXINT8 math, d-Matrix can leverage processing-in-memory cores, which offer extremely high efficiency for fixed-point operations, to accelerate the floating-point computation required by large language models. This is important, because processing-in-memory lets d-Matrix deploy chips with far more compute density and memory density than their competitors.
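To see why a shared exponent suits fixed-point hardware, consider a dot product between two blocks. The sketch below is my own illustration rather than d-Matrix's actual datapath; `bfp_dot` and the example values are hypothetical. The point is that the inner loop is pure integer multiply-accumulate, and the exponents are applied exactly once at the end.

```python
def bfp_dot(exp_a, mant_a, exp_b, mant_b):
    """Dot product of two blocks stored as (shared exponent, integer mantissas)."""
    # Integer multiply-accumulate only: no per-element shifting or alignment.
    acc = sum(a * b for a, b in zip(mant_a, mant_b))
    # The two shared exponents are combined and applied once per block.
    return acc * 2.0 ** (exp_a + exp_b)


# Hand-picked illustrative blocks.
exp_a, mant_a = -6, [32, -64, 16, 48]   # represents [0.5, -1.0, 0.25, 0.75]
exp_b, mant_b = -5, [64, 32, -32, 16]   # represents [2.0, 1.0, -1.0, 0.5]

result = bfp_dot(exp_a, mant_a, exp_b, mant_b)
reference = sum(
    x * y for x, y in zip([0.5, -1.0, 0.25, 0.75], [2.0, 1.0, -1.0, 0.5])
)
print(result, reference)  # both 0.125
```

With conventional floating point, every product in that sum could carry a different exponent and would need its own alignment shift before being accumulated. Here the per-element work never leaves the integer domain.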

The Value of Compute Density

Groq’s cards each offer 230MB of on-die SRAM. d-Matrix’s cards each offer 2GB. How does d-Matrix manage to offer roughly 9x more SRAM than one of their close competitors? The answer is processing-in-memory, and the incredibly high density that it offers.

In GPUs and conventional AI chips, neural network weights, inputs, and parameters are stored in on-chip memory. When you want to compute with those weights, you have to move them from memory into registers, where they’re stored while you use them for math. Moving data around between memory and registers takes time and energy, and those registers themselves take up area on your chip.

Processing-in-memory (PIM), also known as compute-in-memory (CIM) or in-memory computing (IMC), tightly integrates SRAM and computing logic into a single, very dense block.

With a PIM architecture, you don’t need to transfer data from memory to registers and back. Instead, computation is performed directly on the data while it’s still in memory. This eliminates a lot of power that’s normally required to shuttle data back and forth between memory and registers. Most overviews of PIM emphasize this power efficiency as the key value the technology provides.

But PIM also offers significant advantages in terms of compute density. By eliminating large and expensive registers and ALUs, PIM architectures can dedicate more chip area to on-chip SRAM. More on-chip SRAM means fewer accesses to off-chip DRAM. And because DRAM access is the biggest latency and power efficiency bottleneck for AI chips, denser compute can significantly improve performance at scale.

This is why d-Matrix can achieve with one rack what Groq needs eight racks to do. d-Matrix’s PIM fabric is dense and power efficient, so they can get roughly 9x more on-chip memory for every card, and deploy models with lower TCO than other AI chips.
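The per-card SRAM figures translate directly into card counts. Here is a rough sketch of the arithmetic, using the 230MB and 2GB figures quoted above. It assumes 8 bits per weight on both platforms and counts weights only, ignoring KV cache, activations, and any replication, so treat the results as lower bounds rather than real deployment sizes. The function name `cards_needed` is just for illustration.

```python
import math


def cards_needed(params: float, bits_per_weight: float, sram_bytes_per_card: float) -> int:
    """Minimum number of cards whose combined SRAM can hold the weights."""
    model_bytes = params * bits_per_weight / 8
    return math.ceil(model_bytes / sram_bytes_per_card)


PARAMS_70B = 70e9
BITS_PER_WEIGHT = 8      # assumption: 8-bit weights on both platforms

GROQ_SRAM = 230e6        # 230MB per card, as quoted in the article
DMATRIX_SRAM = 2e9       # 2GB per card, as quoted in the article

dmatrix_cards = cards_needed(PARAMS_70B, BITS_PER_WEIGHT, DMATRIX_SRAM)
groq_cards = cards_needed(PARAMS_70B, BITS_PER_WEIGHT, GROQ_SRAM)
print(dmatrix_cards, groq_cards)  # 35 and 305
```

The ratio between the two counts tracks the ratio of per-card SRAM, which is roughly the same order of difference as the one-rack versus eight-rack comparison.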

Are d-Matrix’s chips cost-effective?

No matter how exciting a technology PIM is, I’m not sure if it makes d-Matrix’s chips cost-effective. Customers still need to be willing to buy an entire rack’s worth of chips to run Llama-70B efficiently. This might make sense for some customers who are willing to pay a large upfront cost for ultra-low-latency inference, but it probably won’t make sense for the vast majority of potential customers.

However, I think d-Matrix is blazing a path towards much more efficient AI compute. By combining block-floating-point math and an ultra-dense PIM architecture, d-Matrix can make their chips much more cost-effective than those of their closest competitor, Groq. If d-Matrix introduces HBM into their future architectures to boost performance on models too large to fit in SRAM, I think they could be a compelling player in the AI chip world going forward.

[1] Yes, MXINT8 is a block floating point format. It’s a dumb name, sorry.

Deepseek

Unlike Groq, d-Matrix Corsair is based on CIM, which means that its SRAM serves as both an ALU and a memory cell. 99% of the Corsair chip should be composed of SRAM, which is the main reason why Corsair has 10x the SRAM capacity of Groq.