How to Privately Query Cloud AI Models

Obfuscated Queries, explained.

Let’s say you’re building a medical device, and you want that device to have AI capabilities built right in. It could answer questions, or examine patients, or collect and analyze data. But the most powerful AI capabilities require large, powerful GPU clusters running AI models operated by companies like OpenAI and Anthropic. You can’t just send your data to these third-party models -- that’s a privacy nightmare! But if you commit to running your models entirely on-device, your device’s AI capabilities are going to be significantly limited. If only there was a way to send queries to a cloud AI API, privately.

Obfuscated Queries could solve that problem! Originally developed to help improve the privacy of search engines, query obfuscation technology converts your original, private queries into one or more “obfuscated” queries. These obfuscated queries are crafted to ensure that the service you’re querying can’t reconstruct your original private query. A similar technique can be used for AI models. We’re going to focus on simple classifier models here, but with more research, this could even be scaled up to LLMs!

Linear Approximation of Neural Networks

Let’s start by formalizing the problem a bit. We have a neural network, as well as a hidden query. We want to approximate the network’s output for the hidden query, without revealing the hidden query to the network.

Our obfuscated query methodology is going to treat the network as a black box. This is important in practice, because when you’re submitting your hidden query to a cloud-hosted model, you usually don’t have access to the model itself. But if we don’t know anything about the model, how can we keep our hidden queries actually hidden? The answer is simple. We simply treat the neural network like an arbitrary nonlinear function. Then, we can interpolate the approximate output value for an input value using one of the oldest tricks in the book: linear approximation.

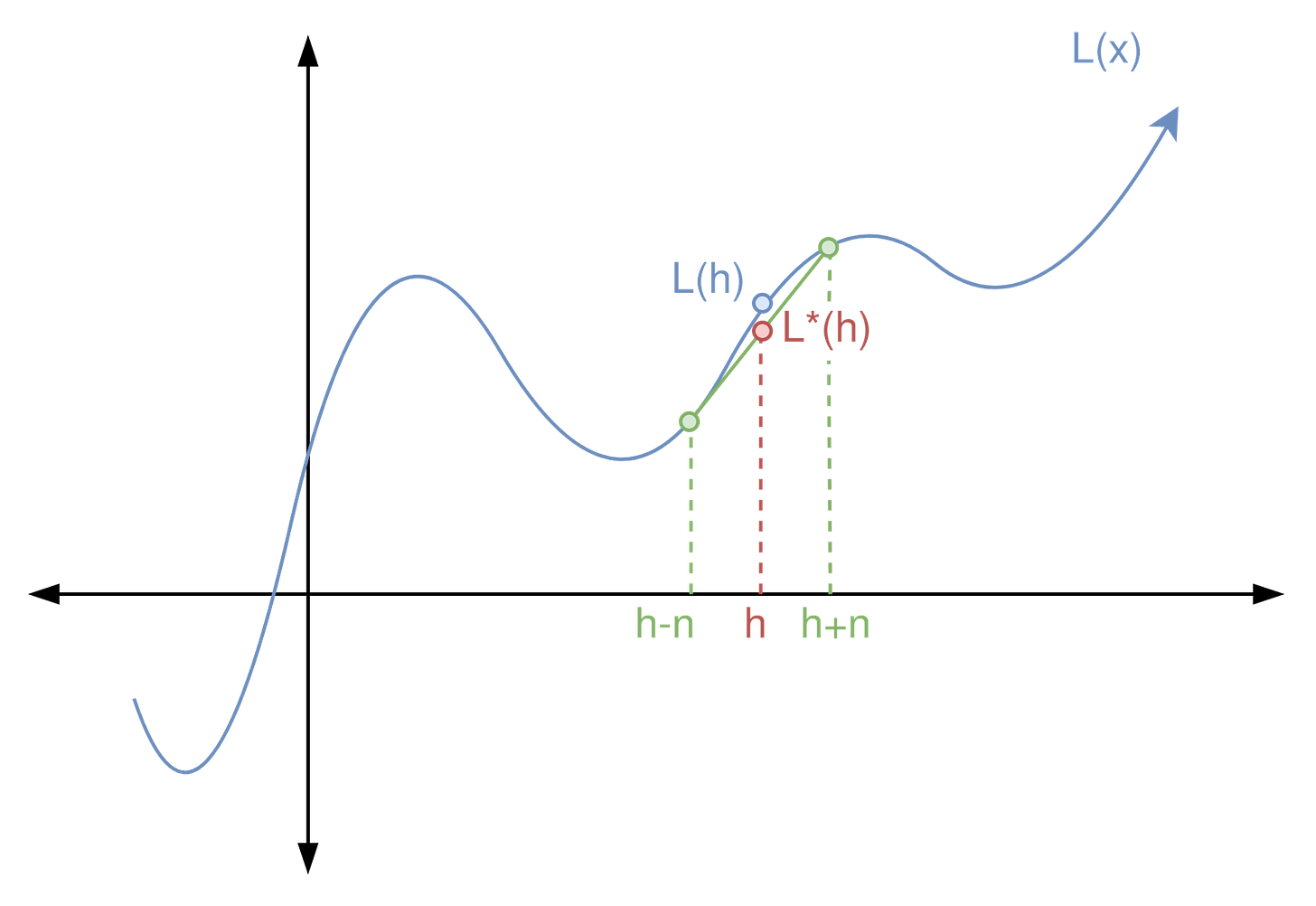

To visualize this, let’s think of our network as a simple, single-variable nonlinear function L(x). In this case, we want to approximate L(h) for some hidden value h, without actually plugging h into L(x). Instead, we calculate L(h+n) and L(h-n) for some small “nudge” value n. Then, we can approximate h as L*(h) = (L(h+n) + L(h-n))/2.

Extending this idea to an entire network just entails moving from a single input variable to a more complex function with many-dimensional inputs and outputs. We need more “nudged” queries, and performing a weighted average is more complex, but the core intuition is the same. In this article, I’m going to refer to these “nudged” queries that are similar to, but not the same as, our hidden query as related queries.

Adding Distractor Queries

At this point, we’re able to submit multiple related queries to a model, interpolate their outputs, and approximate the expected output for our hidden query. But some of you may have noticed that there’s an issue here. Because all of our related queries are similar to our hidden query, the cloud AI provider could simply interpolate the related queries to recover something similar to our hidden query. And that defeats the whole purpose of obfuscating our hidden query in the first place.

Most papers in query obfuscation for search, including Bollegala et. al, solve this in a straightforward way. They generate a set of extra queries, called distractor queries, that are unrelated to the hidden query. Because the cloud AI provider is unaware which queries are distractors and which queries are related, this makes it hard for them to reconstruct the hidden query.

In practice, determining which distractor queries we should use is nontrivial. There are many requirements distractor queries need to satisfy; for example, you need to use enough distractor queries, and they need to be distributed such that they mask the distribution of our related queries. There are also more straightforward requirements; your distractor queries can’t be obviously incoherent or they could easily be filtered out.

For simplicity, we’re not going to be delving into the specifics of generating distractor queries in this article. If you’re interested in reading more about distractor queries as applied to search, I’d recommend taking a look at:

Proxy-Terms Based Query Obfuscation Technique for Private Web Search, Bashir et. al.

Efficient Query Obfuscation with Keyqueries, Frobe et al.

Query Obfuscation by Semantic Decomposition, Bollegala et. al.

Putting it All Together

We applied our linear approximation algorithm to a simple multi-layer perceptron, trained to recognize handwritten digits from the MNIST dataset. We varied the number of related queries submitted to the network, as well as the distance from the related query to the hidden query. For simplicity and to reduce cost, we did not implement distractor terms.

Our full implementation works like this:

We take our hidden query image and add a nudge factor N, which is represented by random noise.1 We repeat this process Q times to generate Q related queries.

We submitted our related queries to the model, and generated a set of Q probabilities for the output.

We average those probabilities to get our expected probability distribution for our hidden query.

An example of a related query with a relatively large nudge factor is shown below. If you look carefully, you may be able to tell that the hidden query was a zero, but it’s somewhat obfuscated by noise. When combined with a set of distractor queries, it would be difficult to reconstruct what the original hidden query was.

In practice, this actually works somewhat well! When we compare our obfuscated model to a normal model, we see the accuracy decrease as additional noise is added, but the model is still capable of performing classification tasks somewhat well.

We also provide a baseline to compare our linear approximation method to. In the baseline, only a single related query is classified, rather than averaging the output probability of multiple related queries. Our linear approximation method outperforms the baseline, especially at high noise levels. The code used to generate these plots is provided here.

While obfuscated queries decrease model performance slightly, the model is still able to successfully classify user queries in a useful way. For privacy-conscious use cases in medicine, banking, and defense, this small performance compromise may be worth it if we want to improve the privacy of queries.

Future Work

This work is simply a proof-of-concept showing that we can reconstruct a hidden query using a set of related queries. Important pieces of a fully-functional query obfuscation pipeline are missing -- most notably, distractor terms. The first step to making this into a practical methodology would be to develop a method to insert distractor queries in a way that helps hide the distribution of the related queries.

One major step forward would be to extend this work to apply to other models, like LLMs. For an image classifier, it’s very easy to generate related queries by adding small amounts of noise to the input image. However, it’s harder to add noise to text. In theory, one could tokenize the input text and nudge each token in semantic space, but this process would be fairly complex and have a number of potential failure cases.

I think LLM query obfuscation is worth investigating, because this kind of query obfuscation requires far fewer resources on a users’ device compared to an on-device LLM. For edge devices, query obfuscation offers high LLM performance with low resource consumption without sacrificing privacy. I hope more work is done so that this technique fully matures!

The noise needs to follow a distribution such that, when it’s combined with the distractor queries, the true query stays hidden. In my example, I’m using a uniform distribution, but I’m unsure if this is the best distribution to use in practice.