Why is it taking so long to build new IP cores?

Standards are getting more complex and harder to satisfy.

The USB4 specification was first released in 2019. It took a year for the first USB4 IP cores to hit the market. Even worse, it wasn’t until 2025 that the first USB4 IP was fully certified by the USB Implementers Forum. And USB4 isn’t the only connectivity protocol where there was a major lag between the specification being finalized and the first IP becoming available.

PCIe 4.0 was notably delayed: the standard was released in 2017, but it took AMD and Intel until 2019 and 2020, respectively, to ship CPUs with PCIe 4.0 support. It got to the point where a new industry consortium spun up to find alternatives to the delayed standard. DisplayPort 2.0 has a similar story: it was released in 2019, but as of 2025, major vendors like Synopsys still don’t offer DisplayPort 2.0 cores at all.

And it’s not just connectivity IP cores that often experience major delays from specification to commercial release. The same is true for video codecs: HEVC H.265 was released in January 2013, but IP didn’t hit the market until 2014, and only from small, specialized video IP vendors. AV1 was standardized in 2018, and it took until late 2020 for IP to hit the market. VVC H.266 was standardized in 2020, and IP wasn’t released until 2024.

In the last decade or so, we’ve seen more and more examples of commercial IP releases lagging significantly behind the release of corresponding specifications and standards. This is becoming a major problem; widely available IP cores are necessary for an ecosystem to grow around a new standard. For example, if DisplayPort 2.0 IP isn’t available, it becomes harder for CPU, GPU, and display vendors to justify building DP 2.0 capabilities into their devices, because other devices consumers own won’t support DP 2.0. The same is true for video codecs, cryptographic algorithms, and memory interfaces.

But why is it taking so much longer to build new IP cores?

Standards are getting more complex

First off, the standards that IP vendors are expected to implement are getting more and more complex over time. Let’s take a look at a state machine from the USB 1.0 specification, released in 1996. The entire spec is a little over 250 pages long, and includes everything you need to know about USB 1.0, from the logical protocol to the electrical specifications to the mechanical drawings of the connector. This state machine handles “bit stuffing”, which inserts an extra 0 after six consecutive 1’s so that the encoded signal never goes too long without a transition.

This single diagram includes basically all of the information you need to implement bit stuffing on USB 1.0. It’s a simple and effective solution for the data transfer speeds that USB 1.0 runs at.
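To give a sense of how little logic that is, here is a minimal Python sketch of the idea. It is not a transcription of the spec's state machine: it only assumes the USB rule of inserting a 0 after six consecutive 1's, and the function names `bit_stuff` and `bit_unstuff` are made up for illustration.

```python
def bit_stuff(bits, max_run=6):
    """Transmitter side: insert a 0 after every run of `max_run` consecutive 1s."""
    out = []
    run = 0
    for b in bits:
        out.append(b)
        if b == 1:
            run += 1
            if run == max_run:
                out.append(0)  # stuffed bit guarantees a transition on the wire
                run = 0
        else:
            run = 0
    return out


def bit_unstuff(bits, max_run=6):
    """Receiver side: drop the 0 that follows every run of `max_run` 1s."""
    out = []
    run = 0
    expect_stuffed = False
    for b in bits:
        if expect_stuffed:
            if b != 0:
                raise ValueError("bit-stuffing violation: expected a stuffed 0")
            expect_stuffed = False
            run = 0
            continue  # discard the stuffed bit
        out.append(b)
        if b == 1:
            run += 1
            if run == max_run:
                expect_stuffed = True
        else:
            run = 0
    return out


payload = [1] * 8
on_wire = bit_stuff(payload)      # one extra 0 is inserted after the sixth 1
recovered = bit_unstuff(on_wire)  # the receiver removes it again
```

The whole mechanism is a counter and one comparison on each side, which is why a single diagram is enough to specify it.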

USB 4.0, on the other hand, is a much more complex specification. The core spec is over 800 pages long, and that doesn’t include many of the mechanical specifications of the connector. USB 4.0 also has a method to prevent long runs of consecutive 1’s or 0’s, but it’s much more complex. It uses a scrambler, which combines the data with the output of a pseudorandom number generator, or PRNG, so that the bits on the wire look random. There are many pages of specifications for this scrambler, as both the transmitter and receiver need to have their PRNGs synchronized to ensure the message gets properly encoded and decoded. Below is a diagram of how USB 4.0 handles re-synchronizing the transmitter and receiver’s scramblers, depending on what mode it’s operating in.

As you can see, USB 4.0 is already a lot more complex, and this is just a single diagram that explains a single part of the scrambler resynchronization process. There are pages and pages of additional information on how to properly implement this feature, and it’s one of many, many complex features in the USB 4.0 specification.

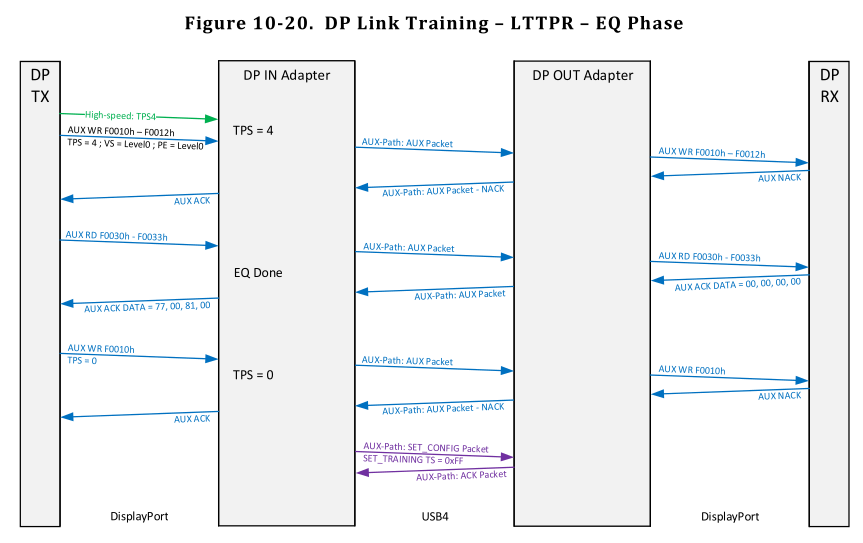
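For contrast, the core idea of a scrambler can also be sketched in a few lines of Python. This is only an illustration under stated assumptions: a common form of scrambler XORs the data with the output of a linear-feedback shift register (LFSR), and the 16-bit polynomial and seed below are a textbook choice, not the ones the USB 4.0 specification defines. The names `Lfsr16` and `scramble` are hypothetical.

```python
class Lfsr16:
    """16-bit Fibonacci LFSR, taps x^16 + x^14 + x^13 + x^11 + 1 (illustrative only)."""

    def __init__(self, seed):
        if seed & 0xFFFF == 0:
            raise ValueError("seed must be non-zero, or the LFSR stays stuck at 0")
        self.state = seed & 0xFFFF

    def next_bit(self):
        s = self.state
        out = s & 1  # the bit shifted out is the next pseudorandom bit
        feedback = (s ^ (s >> 2) ^ (s >> 3) ^ (s >> 5)) & 1
        self.state = (s >> 1) | (feedback << 15)
        return out


def scramble(bits, seed=0xACE1):
    """XOR each data bit with the PRNG stream; running it again descrambles."""
    lfsr = Lfsr16(seed)
    return [b ^ lfsr.next_bit() for b in bits]


data = [0] * 32                           # a long run of 0s, the worst case
on_wire = scramble(data)                  # transmitter output, a mix of 0s and 1s
recovered = scramble(on_wire)             # receiver with the same seed gets the data back
garbled = scramble(on_wire, seed=0x1234)  # receiver out of sync gets garbage
```

The XOR itself is trivial. The hard part, and the reason the spec spends so many pages on it, is keeping the two PRNGs in the same state: as `garbled` shows, a receiver whose generator has drifted from the transmitter's cannot recover anything until the two are resynchronized.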

USB 4.0 also has entirely new features, like link training. This is a complex handshake between the transmitter and the receiver to tune their equalization settings to make the communication link more reliable. USB 1.0 didn’t need this at all, as it was simple and low-speed enough to get away with straightforward, constant equalization methods. But USB 4.0 has multiple link training methods, depending on whether you’re using the USB 4.0 bus to transmit DisplayPort data or regular USB 3.0-style serial data.

All of these features are really important, though. USB 4.0 can run at up to 80 Gbit/s, while USB 1.0 only ran at 12 Mbit/s. That makes USB 4.0 roughly 6,700 times faster than USB 1.0 (80 Gbit/s ÷ 12 Mbit/s ≈ 6,667), and those performance gains had to come from somewhere. To reliably transmit data so much faster, much more complex transmitter and receiver architectures are necessary. But that makes the process of designing IP cores for these new standards that much harder. And it gets even harder when IP core vendors have to support so many different IP cores at once.

IP vendors are stretched thin

IP vendors have large, complex product portfolios they need to maintain. Even though USB 3.0 and 4.0 have been released, some low-speed, low-cost devices still use USB 2.0 IP cores, and IP vendors are often expected to support those legacy protocols alongside more modern ones. Maintaining those cores isn’t free, though. Often, customers will want tweaks to customize legacy IP cores, which requires engineering effort that could otherwise be spent developing new IP cores to meet new standards.

As an example, Synopsys has an incredibly wide portfolio of IP cores, and about 20,000 employees. Of course, some of those employees work on Synopsys’ EDA products, but a good portion of them work on IP development. The overhead of managing that many employees to maintain that many products is huge, and prevents Synopsys from moving quickly to assign their best and brightest employees to tackle IP core development for cutting-edge standards.

But this also creates opportunities for startups, who don’t have that overhead, to find niches where they can outcompete those big players like Synopsys.

Opportunities for disruption

As new standards for connectivity, cryptography, video compression, and memory get released, new opportunities for startups are created. As I mentioned, large IP companies like Cadence, Synopsys, and Rambus are primarily focused on maintaining a large, broad product portfolio. Startups, unencumbered by the need to maintain existing product lines, can focus on out-executing incumbents on one particular new standard.

My last startup, Radical Semiconductor, did this for post-quantum cryptography, and we were acquired in 2024. And we’re not the only example. NGCodec was one of the first providers of FPGA H.265 IP, and was acquired by Xilinx in 2019. PLDA beat many major IP providers to silicon-ready IP for PCIe 5.0 and CXL 2.0, and was acquired by Rambus in 2021.

Most of these IP core startups end up being acquired by an existing IP vendor, rather than becoming a successful IP company in their own right. But potentially, with the advent of AI-powered chip design, a startup could stay at the forefront of new standards and specifications across multiple product lines, and become a legitimate rival to IP giants like Synopsys and Cadence. Either way, regardless of a founder’s ambitions, new standards and specifications have historically been great opportunities for startups to disrupt incumbents, and I suspect that trend will continue into the future.

Interesting to compare the clean sweep of UAL with UEC. Both build on the same Ethernet PHY, but UAL strips the protocol on top down to an admirable minimum. However, UEC, with its thicker legacy stack, can lean on existing switches to get launched. So which is it? Are people going to buy into a cleaner stack, or are they going to choose the old mess with new shortcuts in order to manage risk?

Great post! Got me wondering… have there been counterexamples, where a new standard never really ended up in products? This could be a significant risk for startups, which could fail because nobody wants to acquire them.