So, I have to talk about Extropic.

It’s probabilistically nothing, right?

Disclosure before I jump into the article: I used to lead the silicon team at Normal Computing, a thermodynamic computing company that is considered a competitor to Extropic. I don’t work there anymore, but I’m still an advisor there.

After years of doubt and claims that Extropic was a total grift, Guillaume Verdon and his crew finally announced their first product, their XTR-0 devkit. A lot of people are claiming that this vindicates all of Extropic’s claims and “proves the haters wrong”. But that’s not entirely true.

Yes, the folks who claimed that Extropic was a total scam that would die without ever shipping a piece of hardware were wrong. Extropic did design a chip that is capable of running certain small ML workloads with impressive efficiency. That’s cool, but also most PhDs in chip design do at least one or two small ML chips as part of their graduate research. Building an incredibly efficient chip that only works on MNIST is a bit of a punchline at this point.

Also, everything Extropic is doing is building off a large existing body of work on p-bits and probabilistic computing. I’ve been working in the unconventional computing space most of my career, and I’ve been plugged into what’s actually been going on in the world of p-bits, thermodynamic computers, and Ising machines. So today, we’re going to be talking about what Extropic is doing, the current state of the p-bit research landscape, and their novel contributions.

The Current p-bit State of the Art

Extropic makes one claim early on in their paper that I think is extremely unfair:

Additionally, existing devices have relied on exotic components such as magnetic tunnel junctions as sources of intense thermal noise for random-number generation (RNG). These exotic components have not yet been tightly integrated with transistors in commercial CMOS processes and do not currently constitute a scalable solution.

Put simply, this is false. Most state-of-the-art work on p-bits is implemented using digital logic gates, which is highly scalable on FPGAs and commercial CMOS processes. These architectures use digital pseudorandom number generators (PRNGs), which offer an efficient way to generate numbers with sufficient randomness without relying on exotic materials, with the added bonus of reproducibility due to their pseudorandom nature.
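To make the digital approach concrete, here is a minimal Python sketch of a PRNG-driven p-bit, using the formulation that is common in the p-bit literature: the output is +1 with probability (1 + tanh(beta * I)) / 2. The function names, the `beta` parameter, and the use of Python's built-in generator as the PRNG are illustrative assumptions, not any specific published design.

```python
import math
import random


def pbit_sample(input_current, rng, beta=1.0):
    """Return +1 or -1, with P(+1) = (1 + tanh(beta * input_current)) / 2."""
    # Compare the tanh activation against a uniform draw from [-1, 1].
    r = rng.uniform(-1.0, 1.0)
    return 1 if math.tanh(beta * input_current) > r else -1


def empirical_p_up(input_current, n_samples=20000, seed=0):
    """Estimate P(+1) for a fixed input by repeated sampling."""
    # Seeding the PRNG makes the whole run reproducible (assumed seed value).
    rng = random.Random(seed)
    ups = sum(pbit_sample(input_current, rng) == 1 for _ in range(n_samples))
    return ups / n_samples
```

With zero input the p-bit behaves like a fair coin, and a strong positive input pins it toward +1. Because the generator is seeded, two runs with the same seed produce identical samples, which is the reproducibility bonus mentioned above.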

There are also a large number of analog architectures out there that are scalable, manufacturable, and don’t use exotic technologies. Not only have researchers demonstrated analog p-bits multiple times over, but there’s an equivalence between noise-injected Ising machines and p-bit samplers. That means that all of the analog Ising machine designs, from ring oscillators to cross-coupled latches, can also act as p-bits -- and they’re all manufactured in commercial CMOS processes.

Hell, if we’re less strict and include non-p-bit sampling architectures, analog and digital CMOS implementations of Boltzmann machines have been around since the 1990s.

Extropic also proposes other probabilistic bit structures that mirror existing work in the literature. Extropic’s p-dits and p-modes are similar to current state-of-the-art work on p-dits and programmable Gaussian distributions. To their credit, though, existing work on p-ints and p-dits is digital; if Extropic’s design is all-analog, that would represent a novel design for that specific probabilistic primitive.

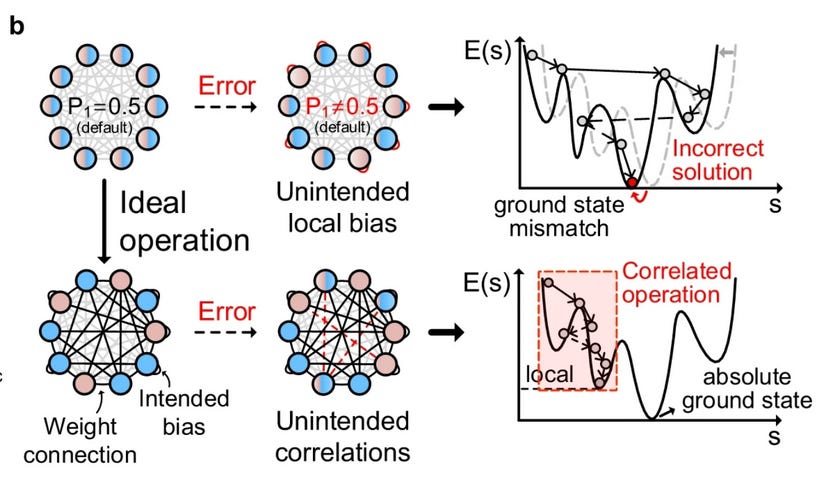

Overall, there are a large number of competing designs for p-bits, all with different tradeoffs. The digital designs are often slightly larger and more power hungry, but are much easier to design, more scalable, and don’t suffer from issues due to process variability that plague analog designs. Analog designs may be smaller and more efficient, but often struggle to implement high-precision energy functions and may make computational errors due to process variations and parasitic effects introducing spurious biases and noise terms.

It’s unclear how much of Extropic’s architecture is analog and how much is digital. It’s also hard to tell how, if at all, they’re addressing issues of process variations in their design. Ultimately, these are the practical issues that plague most analog implementations of Boltzmann machines, and represent the reason why digital implementations are currently dominant in the literature. We’ll see if Extropic has a novel solution to this problem or not.

Comparing to GPUs

Extropic also makes the bold claim that their chips are 10,000x more efficient than a GPU. Unfortunately, these headline numbers are fairly rough estimates for both their hardware and GPUs. Because Extropic’s target task is so simple, there isn’t an optimized GPU state-of-the-art model to compare it to. Instead, their GPU estimates measure the power consumption of very small models on large, powerful hardware; this likely results in extremely poor utilization of the GPU and a lot of wasted energy.

At the same time, their analysis of their hardware is fairly optimistic. They only consider the energy consumed by their RNG, resistor network, clock tree, and local wire capacitance. The Extropic team does admit that this is a very rough estimate, but that raises one big question: if this chip actually implements a full Boltzmann machine, why not just measure its power consumption and report it? It could be the case that Extropic’s test chip has p-bit test structures, but lacks the resistor network between them to implement an entire Boltzmann machine on-chip.

One other major concern of mine is that Extropic’s hardware implements bipartite graphs. Not only does this limit your hardware to natively accelerating sparse models, but it also makes your hardware a lot less competitive against GPUs.

The biggest reason why Gibbs sampling is really slow on GPUs is its serial nature. If you try to update multiple states at the same time, you run into weird issues where “frustrated” connections start to cause oscillations in the system state and errors in the result. This is bad for GPUs, which are natively parallel processing units. But when you start working with bipartite graphs or other sparse graphs, this problem gets a lot easier, and you can update all the nodes in each half of the graph in parallel. Restricted Boltzmann Machines, a kind of EBM that relies on sparse graphs, were partially popularized because they can run so much more quickly on GPUs! If you’re only focusing on bipartite graphs, GPUs are actually not all that bad at sampling.
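Here is a rough sketch of why the bipartite case is friendly to parallel hardware, written as block Gibbs sampling on a tiny Restricted Boltzmann Machine in plain Python. Every name here (`sample_layer`, `block_gibbs`, the weight layout) is a hypothetical choice for illustration. The loop inside `sample_layer` runs serially, but each unit only reads the opposite layer, so on a GPU the whole layer could be updated in one parallel step.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sample_layer(inputs, weight_rows, biases, rng):
    """Sample every unit in one half of the graph given the other half.

    No unit in this layer depends on another unit in the same layer,
    so these updates are independent and could all run in parallel.
    """
    out = []
    for row, bias in zip(weight_rows, biases):
        activation = bias + sum(w * x for w, x in zip(row, inputs))
        out.append(1 if rng.random() < sigmoid(activation) else 0)
    return out


def block_gibbs(W, b_vis, b_hid, n_steps, seed=0):
    """Alternate between the two halves of a bipartite graph.

    W[i][j] couples visible unit i to hidden unit j.
    """
    rng = random.Random(seed)
    n_vis, n_hid = len(b_vis), len(b_hid)
    # Transposed weights: one row per hidden unit.
    W_T = [[W[i][j] for i in range(n_vis)] for j in range(n_hid)]
    v = [rng.randint(0, 1) for _ in range(n_vis)]
    h = [0] * n_hid
    for _ in range(n_steps):
        h = sample_layer(v, W_T, b_hid, rng)  # update all hidden units
        v = sample_layer(h, W, b_vis, rng)    # update all visible units
    return v, h
```

A fully connected graph has no such split: updating two coupled nodes at the same time uses stale values for each other, which is where the oscillations and errors come from.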

So, what’s new here?

Even though Extropic’s paper makes some questionable comments about the state-of-the-art, they do make two novel contributions to p-bit research. Specifically, they propose a new kind of energy-based model, the Denoising Thermodynamic Model, or DTM, with potentially greater stability and efficiency than conventional EBMs. They also propose a more efficient RNG for their p-bits.

Conventional monolithic EBMs draw samples from a single distribution, often parameterized by a neural network. Extropic correctly points out that this often results in distributions that are extremely hard to sample from. Their DTMs instead perform multiple de-noising steps, similar to a diffusion model, where each step is relatively easier to sample from. This idea isn’t totally novel; it’s similar to other techniques to improve sampling, like conventional diffusion models and counterdiabatic driving, but it’s optimized for the specific properties of Extropic’s p-bit computer.

Their p-bits also use a novel RNG architecture. Current digital implementations of p-bits use digital PRNGs combined with LUTs to create a stochastic sigmoid activation function. The most recent, non-state-of-the-art result I found uses 42 look-up tables and 33 registers in an FPGA to represent the p-bit PRNG and activation function. Depending on the process node, an ASIC implementation of the same logic could take up hundreds of square microns of silicon area. Extropic’s RNG is only nine square microns, which is a meaningful improvement!
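For a feel of what "PRNG plus LUT" means in a digital p-bit, here is a toy Python model. It is not the 42-LUT design mentioned above; the 16-bit LFSR (a commonly cited tap set for x^16 + x^14 + x^13 + x^11 + 1), the 4-bit input code, the 8-bit threshold, and the `scale` factor are all assumptions chosen to keep the sketch small.

```python
import math


class LFSR16:
    """16-bit Fibonacci linear-feedback shift register (toy PRNG)."""

    def __init__(self, seed=0xACE1):
        if seed & 0xFFFF == 0:
            raise ValueError("LFSR seed must be non-zero")
        self.state = seed & 0xFFFF

    def step(self):
        s = self.state
        # XOR the tapped bits to form the new top bit.
        bit = (s ^ (s >> 2) ^ (s >> 3) ^ (s >> 5)) & 1
        self.state = (s >> 1) | (bit << 15)
        return self.state

    def next_byte(self):
        # Shift 8 times so successive bytes do not overlap.
        for _ in range(8):
            self.step()
        return self.state & 0xFF


def build_sigmoid_lut(input_bits=4, scale=0.5):
    """Map each signed input code to an integer threshold in 0..256."""
    half = 1 << (input_bits - 1)
    return [round(256 / (1 + math.exp(-scale * code)))
            for code in range(-half, half)]


def pbit_output(code, lut, lfsr, input_bits=4):
    """Output 1 when the random byte falls below the LUT threshold."""
    half = 1 << (input_bits - 1)
    return 1 if lfsr.next_byte() < lut[code + half] else 0


def empirical_rate(code, n_samples=4000, seed=0xACE1):
    """Fraction of 1s the toy p-bit emits for a fixed input code."""
    lut = build_sigmoid_lut()
    lfsr = LFSR16(seed)
    return sum(pbit_output(code, lut, lfsr) for _ in range(n_samples)) / n_samples
```

In hardware, the shift register maps onto flip-flops and the table plus comparator map onto look-up tables, which is roughly where the register and LUT counts in FPGA results come from.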

However, it’s unclear how robust the sigmoid transfer function of their RNG is to process variations, as the only information on process variation provided relates to the energy and lag time. One of the major advantages of the digital PRNG designs is that they get to ignore mismatch entirely. Also, digital PRNG designs are much, much faster than Extropic’s analog RNG. Extropic’s RNG produces random bits at a rate of 10 MHz, while digital PRNGs can often hit frequencies approaching 1 GHz, a gap of roughly two orders of magnitude. Hopefully Extropic’s upcoming Physical Review Letters paper sheds some additional light here.

My take on Extropic

Extropic is doing some genuinely interesting research on p-bits and energy-based models. If their RNGs are actually more efficient than the PRNGs used by existing p-bit architectures, that could offer a meaningful improvement in the number of p-bits you can fit on a single chip, as well as their power consumption.

Also, their results seem to show that their new Denoising Thermodynamic Models genuinely outperform more naive implementations of EBMs. That’s real research progress! And if their new models are also more performant than conventional diffusion models in a fairer head-to-head comparison with a GPU, that could be an interesting first step towards scaling up EBMs on probabilistic computers.

Ultimately, though, the idea of p-bits isn’t novel. The idea of analog p-bits isn’t novel. Running EBMs using p-bits isn’t novel. Extropic is specifically proposing a new p-bit RNG and a new kind of EBM that could improve the performance and scalability of probabilistic computers. It’s interesting research progress, and would make for a very impressive PhD thesis or two, but it’s not “climbing the Kardashev scale through worship of the Thermodynamic God”, or whatever Gill has been tweeting about these days.

I will give Extropic one thing, though. The limiting factor for p-bit adoption is the lack of interested users and worthwhile algorithms. The sheer amount of hype and interest they’ve generated may bring p-bits from an obscure academic interest for unconventional computing nerds to something that a much larger crowd of researchers is interested in tinkering with. There’s a chance that turns into a real algorithmic breakthrough that could enable their hardware to run more meaningful, large-scale workloads than just Fashion-MNIST.

"These architectures use digital pseudorandom number generators (PRNGs), which offer an efficient way to generate numbers with sufficient randomness"

by what definition of extremely efficient?

https://youtu.be/dRuhl6MLC78?si=-m_wWvY95RWjVLub&t=2359

Actually, I make most of your points in this talk. Might be worth a watch for anyone that found this post interesting.

Would you be willing to speculate a bit on their first proposal to use Josephson junction fluxonium qubits to implement p-bits? They seem to have dropped this idea for now, but it would've been great if we found a better use for superconducting circuits other than NISQ quantum computers.